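While working, I ran into many cases where dask and pandas use different keywords or behave differently.

Because of those differences (loading data into memory up front vs. loading it later), I hit a lot of errors. I'm still not sure whether to adopt dask at all.

The confusing behavior differences and errors need to be written down.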

If you just want the conclusion, see: https://www.shiksha.com/online-courses/articles/pandas-vs-dask/#dask

Pandas

install

Python version support

Officially Python 3.9, 3.10, 3.11 and 3.12.

Installing from PyPI

pandas can be installed via pip from PyPI.

$ pip install pandas

$ pip install "pandas[excel]"  # with Excel read/write support

# Uninstall

$ pip uninstall pandas -y

# Running tests (requires pytest and hypothesis)

>>> import pandas as pd

>>> pd.test()

running: pytest -m "not slow and not network and not db" /home/user/anaconda3/lib/python3.9/site-packages/pandas

============================= test session starts ==============================

platform linux -- Python 3.9.7, pytest-6.2.5, py-1.11.0, pluggy-1.0.0

rootdir: /home/user

plugins: dash-1.19.0, anyio-3.5.0, hypothesis-6.29.3

collected 154975 items / 4 skipped / 154971 selected

........................................................................ [ 0%]

........................................................................ [ 99%]

....................................... [100%]

==================================== ERRORS ====================================

=================================== FAILURES ===================================

=============================== warnings summary ===============================

=========================== short test summary info ============================

= 1 failed, 146194 passed, 7402 skipped, 1367 xfailed, 5 xpassed, 197 warnings, 10 errors in 1090.16s (0:18:10) =

# Required Dependencies

numpy, python-dateutil, pytz, tzdata (pip installs these automatically along with pandas)

Reference: Installation, pandas 2.2.2 documentation (pandas.pydata.org)

The easiest way to install pandas is as part of the Anaconda distribution, a cross-platform distribution for data analysis and scientific computing. The Conda package manager is the recommended installation method for most users.

Frequently used methods

import pandas as pd

pd.read_excel('/path_of_excel')  # .xlsx needs an engine such as openpyxl

pd.read_parquet('/path_of_parquet')  # needs pyarrow or fastparquet

pd.read_csv('/path_of_csv')
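A self-contained sketch of the read calls above, assuming a small hypothetical CSV kept in memory (`io.StringIO`) instead of the placeholder path. `read_csv` accepts either a path or any file-like object; `read_excel` and `read_parquet` take a path the same way but need the optional engines noted above.

```python
import io

import pandas as pd

# Hypothetical CSV content standing in for a real file on disk.
csv_text = "id,name,score\n1,alice,90\n2,bob,45\n3,carol,72\n"

# read_csv accepts a path or any file-like object.
df = pd.read_csv(io.StringIO(csv_text))

print(df.shape)  # (3, 3): 3 rows, 3 columns
print(df.head())
```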

df.head(n=5)

Returns the first n rows.

df.tail(n=5)

Returns the last n rows.

df.sample(n=None, frac=None, replace=False, weights=None, random_state=None, axis=None, ignore_index=False)

Returns a random sample of rows (n rows, or a fraction frac of them).
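A small sketch of `head`, `tail` and `sample` on a hypothetical 10-row frame. Passing `random_state` makes `sample` reproducible, and `frac` selects a fraction of the rows instead of a fixed count.

```python
import pandas as pd

# Hypothetical 10-row frame.
df = pd.DataFrame({"x": range(10)})

first = df.head(3)  # rows 0, 1, 2
last = df.tail(3)   # rows 7, 8, 9

# The same random_state gives the same sample every time.
s1 = df.sample(n=4, random_state=42)
s2 = df.sample(n=4, random_state=42)

# frac=0.5 of 10 rows -> 5 rows.
half = df.sample(frac=0.5, random_state=0)
print(len(half))
```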

df.info()

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 column_1 1000000 non-null object

1 column_2 1000000 non-null object

2 column_3 1000000 non-null object

df.describe(include='all')

# categorical numeric object

count 3 3.0 3

unique 3 NaN 3

top f NaN a

freq 1 NaN 1

mean NaN 2.0 NaN

std NaN 1.0 NaN
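The `describe(include='all')` output above is consistent with a small three-row frame like the hypothetical one below (one categorical, one numeric, one object column). This is an assumed reconstruction for experimenting, not the author's data.

```python
import pandas as pd

# Hypothetical frame: categorical, numeric and object columns.
df = pd.DataFrame({
    "categorical": pd.Categorical(["d", "e", "f"]),
    "numeric": [1, 2, 3],
    "object": ["a", "b", "c"],
})

df.info()  # prints per-column non-null counts and dtypes

# include="all" summarizes every column, not only the numeric ones;
# statistics that do not apply to a column show up as NaN.
desc = df.describe(include="all")
print(desc)
```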

# Select only the rows where the column's value is greater than 50

condition = df['column_name'] > 50

df[condition]
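A runnable sketch of boolean filtering, assuming a hypothetical frame where `column_name` is a placeholder for a real column. The comparison produces a boolean Series, and indexing with it keeps only the `True` rows; multiple conditions are combined with `&` / `|`, each in parentheses.

```python
import pandas as pd

# Hypothetical data; "column_name" is a placeholder.
df = pd.DataFrame({"column_name": [10, 55, 50, 80]})

condition = df["column_name"] > 50  # boolean Series, one value per row
filtered = df[condition]            # rows where condition is True

# Combine conditions with & (and) / | (or); parentheses are required.
between = df[(df["column_name"] > 50) & (df["column_name"] < 80)]
print(filtered)
```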

# merge

df.merge(right,  # right: DataFrame or named Series

how='inner', on=None, left_on=None, right_on=None,

left_index=False, right_index=False, sort=False, suffixes=('_x', '_y'),

copy=None, indicator=False, validate=None)
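A short sketch of the `how`, `on`, `suffixes`, `indicator` and `validate` parameters, using two hypothetical frames that share a `key` column.

```python
import pandas as pd

# Hypothetical left/right frames sharing a "key" column.
left = pd.DataFrame({"key": [1, 2, 3], "value": ["a", "b", "c"]})
right = pd.DataFrame({"key": [2, 3, 4], "value": ["x", "y", "z"]})

# Inner join keeps keys present in both. The overlapping "value" column
# gets the default suffixes "_x" (left) and "_y" (right).
# validate="one_to_one" raises MergeError if a key repeats on either side.
inner = left.merge(right, how="inner", on="key", validate="one_to_one")

# Left join keeps every left row; indicator=True adds a "_merge" column
# saying whether each row matched ("both") or not ("left_only").
left_join = left.merge(right, how="left", on="key", indicator=True)
print(left_join)
```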

# pandas append (a -> b order)

pd.concat([df, B])  # df.append(B) was removed in pandas 2.0

dask -> dd.concat([a, b], axis=0)  # takes a list; axis=0 (default) stacks rows, axis=1 joins columns
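A minimal pandas sketch of appending with `pd.concat`, using two hypothetical frames. Like `dd.concat`, it takes a list; `ignore_index=True` renumbers the rows, and `axis=1` places frames side by side instead.

```python
import pandas as pd

a = pd.DataFrame({"x": [1, 2]})
b = pd.DataFrame({"x": [3, 4]})

# Stack rows: a first, then b; renumber the index 0..3.
rows = pd.concat([a, b], ignore_index=True)

# Side by side (axis=1); rename first so the column names do not clash.
cols = pd.concat([a, b.rename(columns={"x": "y"})], axis=1)
print(rows)
print(cols)
```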

# List of columns

df.columns

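# Fill empty (NaN) values with 0. * Note: this can cause casting errors when merging. *

df = df.fillna(0)  # fillna returns a new DataFrame; reassign to keep the result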
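One way to avoid the casting surprises mentioned above, sketched on a hypothetical frame: fill each column with a value of a matching type (a dict passed to `fillna`), then cast explicitly. A numeric column that contained NaN stays `float64` after filling, which matters if it is later used as an integer merge key.

```python
import pandas as pd

# Hypothetical frame with missing values in a numeric and a text column.
df = pd.DataFrame({"amount": [1, None, 3], "label": ["x", None, "z"]})

# Per-column fill values: 0 for the number, "" for the text.
filled = df.fillna({"amount": 0, "label": ""})

# "amount" is still float64 because NaN forced it to float; cast explicitly.
filled["amount"] = filled["amount"].astype("int64")
print(filled.dtypes)
```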

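# s3 put object (s3 upload)

import boto3

def upload_to_s3(bucket_name, body, key='ThefileNameOf.parquet'):
    # body: bytes or a file-like object, e.g. the contents of a parquet file
    s3 = boto3.client(
        's3',
        aws_access_key_id='key',
        aws_secret_access_key='password',
        region_name='region',
        endpoint_url='http://127.0.0.1',  # custom endpoint, e.g. a local S3-compatible server
        verify=False,  # disables TLS certificate verification
    )
    # S3 keys normally have no leading '/'
    s3.put_object(Bucket=bucket_name, Body=body, Key=key)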

Dask

What is Dask?

Dask is a Python-based parallel computing library for data analytics that can run on anything from a single-core computer to a sizable cluster of machines. It offers a high-level interface for parallel and distributed computing.

Dask offers Dask Arrays and Dask DataFrames as its two primary data structures. A Dask DataFrame extends the pandas DataFrame and is made up of many smaller pandas DataFrames (partitions), just as a Dask Array is made up of many NumPy arrays (chunks).

These data structures offer a mechanism to distribute computations over numerous cores or machines to parallelize operations on huge datasets.

# install

$ python -m pip install "dask[complete]"  # Install everything

# Stop Using Dask When No Longer Needed

In many workloads it is common to use Dask to read in a large amount of data, reduce it down, and then iterate on a much smaller amount of data. For this latter stage on smaller data it may make sense to stop using Dask, and start using normal Python again.

import dask.dataframe as dd

df = dd.read_parquet("lots-of-data-*.parquet")

df = df.groupby('name').mean() # reduce data significantly

df = df.compute() # continue on with pandas/NumPy

# Converting an existing pandas DataFrame (dd = dask.dataframe)

dd.from_pandas(df, npartitions=10)

# Loading data directly into a dask DataFrame, for example:

dd.read_csv('/path_of_csv')


import dask.dataframe as dd

import pandas as pd

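# Read with pandas, then convert to a dask object.

# Note: pd.read_csv loads the whole file into memory first; dd.read_csv avoids that.
df = pd.read_csv("/path_of_csv")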

ddf = dd.from_pandas(df, npartitions=10)

"""

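Data is loaded into memory only when compute() is called, and the result comes back as an ordinary Python (pandas) object, so the OOM errors I was getting in the docker + pandas environment did not occur (as long as the computed result itself fits in memory).

persist() loads the data into memory but keeps it as a Dask object, so subsequent computations on it are more efficient.

The syntax is not very different from pandas.

1. Uses lazy evaluation and builds a task graph

--> The actual computation runs only when compute() is called, whereas pandas executes each operation immediately.

2. Supports parallel processing, so it is effective for large datasets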

"""

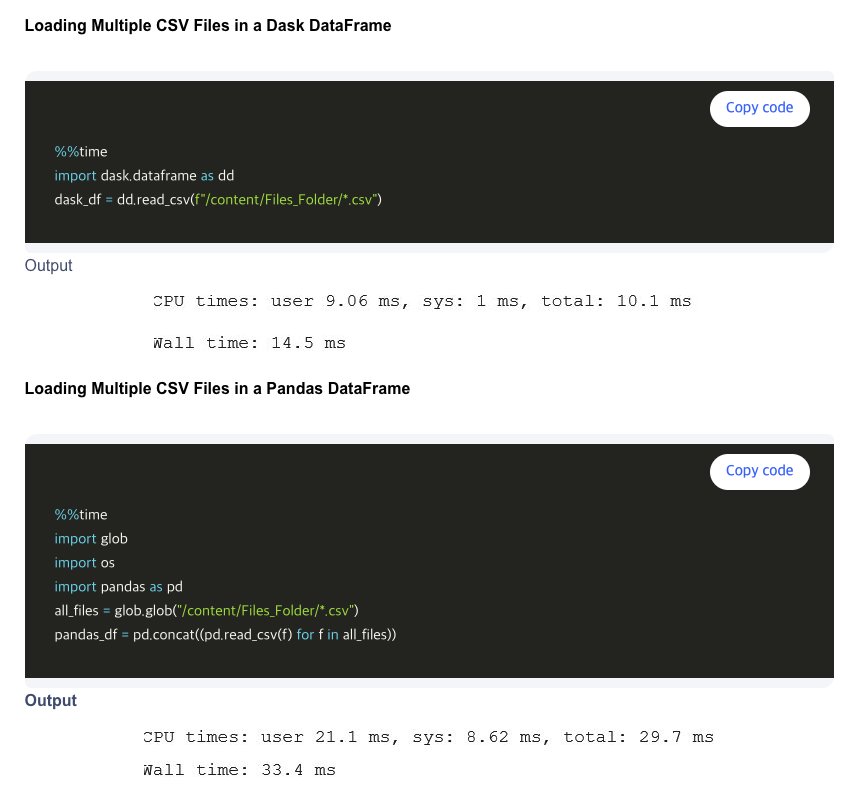

Speed comparison

References:

Pandas vs Dask - Which One is Better? (Shiksha Online, www.shiksha.com): the main differences between pandas and Dask.

Dask documentation (docs.dask.org): includes setting up a local cluster with from dask.distributed import LocalCluster; cluster = LocalCluster(); client = cluster.get_client().
